MCP Foundations and Background

Publication Date: June 22, 2025

Introduction

The Model Context Protocol (MCP) is an open standard for connecting AI systems to external tools, data sources, and capabilities. This article summarizes the foundational concepts from Part 1 of the MCP crash course: why traditional context management techniques fall short, and how MCP addresses those gaps.

Understanding MCP Through Analogy

Think of MCP as a universal translator. Imagine you speak only English but need to communicate with people who speak French, German, and other languages. Without a translator, you would have to learn each language individually, which quickly becomes unmanageable. With a translator:

- You speak to the translator in English

- The translator infers what information you need

- It selects the appropriate person to communicate with

- It returns the response in your language

MCP functions similarly, allowing AI agents to communicate with various tools and capabilities through a single, standardized interface.

The Context Management Challenge

Large Language Models (LLMs) face several fundamental limitations when it comes to context management:

Limited Context Windows

- Models have a maximum context length

- Information exceeding this window cannot be directly processed

- Critical data may be lost or truncated

Static Knowledge Base

- Models are trained on data up to a specific cutoff date

- They lack awareness of events beyond their training data

- Real-time information access requires external tools

Traditional Workarounds

Before MCP, developers relied on basic techniques that had significant limitations:

- Truncation and Sliding Windows: Keeping the most recent dialogue and discarding older context, at the risk of losing important information

- Summarization: Condensing long documents into shorter forms, at the cost of possible errors and lost details

- Template-based Prompts: Filling structured prompts with placeholders for context, which leaves developers to handle complex retrieval logic
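To make the truncation trade-off concrete, here is a minimal sliding-window sketch. It assumes tokens can be approximated by whitespace-separated words (a real system would use the model's own tokenizer), and the function name `sliding_window` and the sample conversation are hypothetical.

```python
def sliding_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose combined size fits within max_tokens.

    Assumption: tokens are approximated by whitespace-separated words;
    a production system would count with the model's own tokenizer.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message so recent turns are kept first.
    for msg in reversed(messages):
        cost = len(msg.split())
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "user: my account id is 4417",
    "assistant: thanks, noted",
    "user: what plan am I on?",
    "assistant: checking now",
]
print(sliding_window(history, max_tokens=10))
# → ['user: what plan am I on?', 'assistant: checking now']
```

The first turn, which holds the account id that the later question depends on, falls outside the budget and is silently discarded. That is exactly the information loss described above.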

Evolution of Pre-MCP Techniques

Static Prompting Era

Initially, using an LLM meant providing all necessary information in the prompt. If the model didn't know something (like current weather or live database records), there was no way for it to find out.

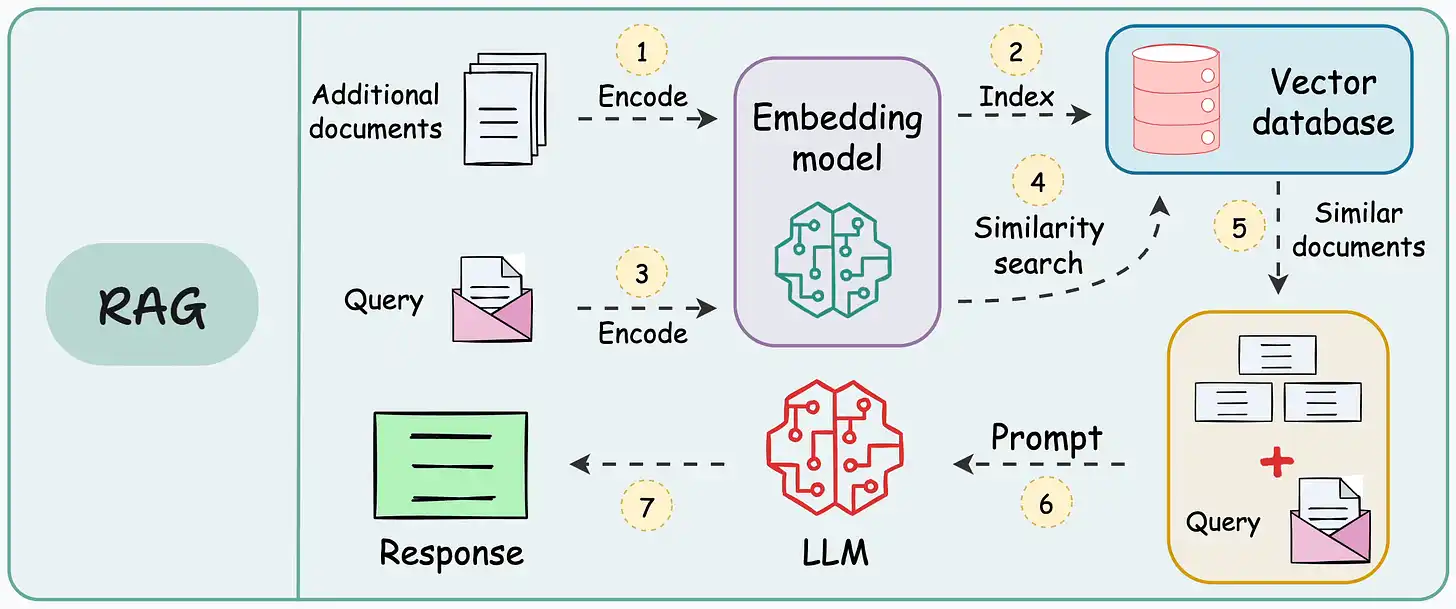

Retrieval-Augmented Generation (RAG)

RAG was a significant advance: relevant documents are retrieved from an external store and inserted into the prompt, giving the model access to data outside its training set. However, it had limitations:

- Models remained passive consumers of data

- Developers had to implement retrieval logic

- Primarily addressed knowledge lookup, not action execution

- No standardized approach across different implementations
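The sketch below shows where the work sits in a basic RAG pipeline. It is a toy, assuming a hypothetical in-memory corpus and using simple word overlap in place of the embedding-based similarity search most real systems use. The developer writes the retrieval and prompt assembly; the model only receives the finished text.

```python
import re

# Hypothetical in-memory corpus standing in for a document store.
DOCS = [
    "Items can be returned within 30 days with a receipt.",
    "Standard shipping takes 3 to 5 business days.",
    "The support desk is open 9am to 5pm on weekdays.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for embedding-based similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(DOCS, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    """Developer-written glue: the model only ever sees the finished prompt."""
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How long does shipping take?"))
```

Note that nothing here lets the model act: it cannot ask for a different document or trigger an operation, which is the "passive consumer" limitation listed above.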

Prompt Chaining and Agents

More advanced applications began using prompt chaining, in which the model outputs a command such as SEARCH: 'weather in SF' that the surrounding system parses, executes, and feeds back to the model as a new observation. This ReAct (Reasoning + Action) pattern was powerful but had drawbacks:

- Ad-hoc and fragile implementations

- No common standards

- Each developer built custom chaining logic

- Difficult to generalize across different tools
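A hand-rolled command parser of the kind described above might look like the following sketch. The command grammar, the `TOOLS` table, and the `fake_search` stub are all hypothetical; the point is that every team invented its own format, and small deviations in the model's output break it.

```python
import re
from typing import Optional


def fake_search(query: str) -> str:
    # Hypothetical stub; a real agent would call a search API here.
    return f"(stub results for {query!r})"


TOOLS = {"SEARCH": fake_search}

# Ad-hoc grammar: an upper-case tool name, a colon, then a single-quoted argument.
COMMAND = re.compile(r"^(?P<tool>[A-Z]+):\s*'(?P<arg>[^']*)'$")


def run_step(model_output: str) -> Optional[str]:
    """Execute the first recognized command in the model's text, if any."""
    for line in model_output.splitlines():
        m = COMMAND.match(line.strip())
        if m and m.group("tool") in TOOLS:
            return TOOLS[m.group("tool")](m.group("arg"))
    return None  # no command found: treat the text as a final answer


print(run_step("Thought: I need live data.\nSEARCH: 'weather in SF'"))
# → (stub results for 'weather in SF')
print(run_step('SEARCH: "weather in SF"'))
# → None (double quotes break the hand-written parser)
```

The second call shows the fragility: the model's intent is identical, but a change of quote style makes the command invisible to the system.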

Function Calling Mechanisms

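In 2023, OpenAI introduced structured function calling, allowing developers to describe functions (name, description, and a JSON Schema for the parameters) that the LLM could choose to invoke by name. The model returns the function name and JSON-encoded arguments, and the application executes the call. This was more structured than plain-text commands, but each provider defined its own format, so there was still no universal standard.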
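The following sketch shows the application side of function calling. The tool definition is modeled on the shape of OpenAI's Chat Completions `tools` entries, but no API is called: the model's reply is a hard-coded dict, and `get_weather`, `REGISTRY`, and `dispatch` are hypothetical names for illustration.

```python
import json

# Tool definition in the JSON Schema style of OpenAI's Chat Completions "tools" list.
# This dict is what the application sends to the model alongside the prompt.
GET_WEATHER_SCHEMA = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> str:
    # Hypothetical stub; a real implementation would call a weather service.
    return f"Weather lookup for {city} (stub)"


# Application-side registry: function name -> (callable, schema).
REGISTRY = {"get_weather": (get_weather, GET_WEATHER_SCHEMA)}


def dispatch(tool_call: dict) -> str:
    """Execute a model-emitted call; arguments arrive as a JSON-encoded string."""
    fn, schema = REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])
    missing = [p for p in schema["function"]["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return fn(**args)


# Hard-coded stand-in for a tool call the model might return.
model_call = {"function": {"name": "get_weather", "arguments": '{"city": "San Francisco"}'}}
print(dispatch(model_call))  # → Weather lookup for San Francisco (stub)
```

The structure is a clear improvement over regex parsing, but the registry, schema format, and dispatch loop are still tied to one vendor's conventions and rebuilt inside every application.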

MCP as the Universal Solution

MCP addresses these limitations by providing:

- Universal Connectivity: Acts like USB-C for AI systems, standardizing connections between models and capabilities

- Secure Communication: Provides a secure, standardized way for AI models to communicate with external data sources

- Dynamic Context: Lets AI systems discover and request relevant context at runtime instead of having it fixed in the prompt, making them more adaptable and useful

- Modular Architecture: Enables building modular AI workflows
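Under the hood, MCP messages are JSON-RPC 2.0 requests and responses, and the specification defines methods such as `tools/list` (discover what a server offers) and `tools/call` (invoke a tool by name). The toy handler below is a minimal sketch of that exchange, not the official SDK: it skips the initialization handshake, capability negotiation, and transport (stdio or HTTP), and the `get_weather` tool is a hypothetical stub.

```python
import json

# One tool advertised by this toy server. The field names (name, description,
# inputSchema) follow the MCP specification's tool listing format.
TOOLS = [{
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]


def call_tool(name: str, arguments: dict) -> dict:
    """Run a tool and wrap its output in MCP's content-block result shape."""
    if name != "get_weather":
        return {"content": [{"type": "text", "text": f"Unknown tool: {name}"}], "isError": True}
    # Hypothetical stub; a real server would query a weather service here.
    text = f"Weather lookup for {arguments['city']} (stub)"
    return {"content": [{"type": "text", "text": text}], "isError": False}


def handle(raw: str) -> str:
    """Answer a single JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req["id"]}
    if req["method"] == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif req["method"] == "tools/call":
        params = req["params"]
        reply["result"] = call_tool(params["name"], params.get("arguments", {}))
    else:
        # -32601 is JSON-RPC 2.0's standard "Method not found" error code.
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)


request = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
           "params": {"name": "get_weather", "arguments": {"city": "San Francisco"}}}
print(handle(json.dumps(request)))
```

Because every MCP server speaks the same two methods, a client written once can list and call tools on any server. This is the "USB-C" property: the connector is standardized, whatever sits behind it.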

Key Learning Objectives

By the end of this crash course series, you will understand:

- What MCP is and why it's essential for modern AI systems

- How MCP enhances context-awareness in AI applications

- How to dynamically provide AI systems with necessary context

- How to connect models to tools and capabilities via MCP

- How to build your own modular AI workflows using MCP

The Path Forward

This foundational understanding sets the stage for deeper exploration of MCP's architecture, implementation, and practical applications. The protocol represents a significant evolution in how AI systems interact with external resources, moving from fragmented, custom solutions to a unified, standardized approach.

MCP's importance lies not just in solving current limitations but in enabling new possibilities for AI system integration and capability extension. As AI applications become more complex and require real-time data access, MCP provides the infrastructure needed to build robust, scalable solutions.

Conclusion

The Model Context Protocol represents a paradigm shift in AI system architecture, moving from ad-hoc tool integration to a standardized, universal approach. By understanding the limitations of traditional context management and the evolution of pre-MCP techniques, we can better appreciate why MCP is essential for the future of AI applications.

As we continue through this crash course series, we'll explore MCP's technical architecture, implementation details, and practical applications that will enable you to build more capable and robust AI systems.

Note: This summary is based on the available preview content from the original article. The full article contains additional technical details and implementation examples available to subscribers.

Source: The Full MCP Blueprint: Background, Foundations, Architecture, and Practical Usage (Part A) - Daily Dose of Data Science

About the Author: Nawaf Alluqmani is a technology enthusiast specializing in AI and machine learning systems. This article is part of our ongoing series exploring cutting-edge AI technologies and their practical applications.